How Mobicom discovered 50% hidden traffic with BENOCS Analytics

About Mobicom

Mobicom Networks is a group company of Mobicom Corporation and Mongolia’s pioneering and largest mobile network operator, serving over 1.7 million subscribers across one of the world’s least densely populated countries. Established in 1996 as a Mongolian-Japanese joint venture, Mobicom has been part of the KDDI Corporation Group since 2016. With network coverage spanning 95% of Mongolia’s territory, Mobicom delivers comprehensive telecommunications services, including cellular communications, international connectivity (IEPL, IPLC), IP wholesale, transmission lease, and data center services. Mobicom Networks operates three international PoP routers, located in Hong Kong, Frankfurt, and Tokyo, that provide transit connectivity between Mongolia, Asia, and Europe.

The Challenge: Operating with blind spots

Operating a nationwide mobile network in Mongolia presents unique challenges, but one critical issue was much closer to home: it sat right inside Mobicom’s own network infrastructure. Like many telecommunications operators, they deploy cache servers throughout their network to deliver popular content directly to subscribers. These cache servers reduce bandwidth costs and improve user experience by serving content locally rather than over expensive international transit or private network interconnect (PNI) links.

However, their existing network monitoring solution had a significant blind spot. It was built primarily for security monitoring, specifically DDoS detection and mitigation, and as a result it provided no visibility into traffic served by internal cache servers. Such platforms focus on traffic entering and leaving the network at peering and transit points and generally do not collect NetFlow or IPFIX data from internal cache servers, since those servers sit inside the operator’s premises and do not need the same level of DDoS scrutiny. The reasoning is sound from a security perspective, but it had unintended consequences for traffic engineering and business intelligence.

The impact was severe: Mobicom’s network operations team couldn’t see a substantial portion of their network traffic. They had no way to monitor cache performance, identify capacity constraints, understand subscriber behavior, or troubleshoot cache-related issues. Infrastructure investment decisions were being made based on incomplete data, and when subscribers reported streaming problems, the team had no visibility into whether cache servers were functioning properly.

Solution

What Mobicom needed was a solution built for comprehensive network intelligence, not just security monitoring. Enter BENOCS Analytics: operational in Mobicom’s network since 2025, it now provides complete end-to-end network visibility, including the previously invisible cache infrastructure. The key differentiator is its ability to collect and analyze a wide range of protocols (Flow, BGP, SNMP, DNS, and IGP) from the entire network, including cache servers inside the internal network infrastructure. It also takes a fundamentally different approach to traffic analysis through its multi-dimensional Sankey visualization, which lets network operators follow traffic flows across several dimensions simultaneously:

- Source and destination ASNs, including content providers

- Ingress and egress routers and interfaces

- Source and destination subnets, IP addresses, and ports

- Protocols and service types

- Applications and services
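
One plausible way to build such a multi-dimensional Sankey is to sum traffic for every pair of adjacent dimension values, so each band shows how much volume moves from, say, a source ASN to an ingress router. The sketch below assumes flow records that are already enriched with ASN and router names; the `FlowRecord` type, the dimension order, and the router names are hypothetical and do not describe BENOCS’ internal implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    # Hypothetical enriched flow record: each field is one Sankey dimension.
    src_asn: str
    ingress_router: str
    egress_router: str
    dst_subnet: str
    byte_count: int


# Assumed left-to-right order of the Sankey columns.
DIMENSIONS = ("src_asn", "ingress_router", "egress_router", "dst_subnet")


def sankey_links(flows, dimensions=DIMENSIONS):
    """Sum bytes for every (value in column i -> value in column i+1) pair."""
    links = defaultdict(int)
    for flow in flows:
        # Prefix each value with its dimension so equal strings in
        # different columns remain distinct Sankey nodes.
        nodes = [f"{dim}={getattr(flow, dim)}" for dim in dimensions]
        for left, right in zip(nodes, nodes[1:]):
            links[(left, right)] += flow.byte_count
    return dict(links)


if __name__ == "__main__":
    flows = [
        FlowRecord("AS64500", "pop-hkg", "core-1", "198.51.100.0/24", 700),
        FlowRecord("AS64500", "pop-fra", "core-1", "198.51.100.0/24", 300),
    ]
    for (src, dst), volume in sankey_links(flows).items():
        print(f"{src} -> {dst}: {volume}")
```

Adding or reordering entries in `DIMENSIONS` is all it takes to pivot the same flow data into a different view.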

Implementation

BENOCS worked with Mobicom’s team to configure all protocol exports (Flow, BGP and SNMP) from all critical network elements. The key to revealing cache traffic was understanding that content provider caches are deployed in different ways across operator networks, each requiring different identification methods.

Different types of cache deployments:

Caches with BGP Peering Sessions – Some content providers deploy caches that establish full BGP peering sessions with the operator’s network. These caches announce routes originated by their own AS numbers, making them automatically identifiable through standard BGP AS path analysis. BENOCS ingests the BGP data and attributes traffic to these caches based on the origin AS in the routing table.
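
The attribution step can be pictured as a longest-prefix match of each flow’s source address against the BGP table, followed by reading the origin AS (the last entry of the AS path). This is a minimal sketch using Python’s standard `ipaddress` module; the routing table uses documentation prefixes and ASNs and is entirely hypothetical, and a production collector would use a prefix trie rather than a linear scan.

```python
import ipaddress

# Hypothetical BGP table: prefix -> AS path as seen by the operator.
RIB = {
    "203.0.113.0/25": [64511],          # announced directly by a cache's BGP session
    "203.0.113.0/24": [64496, 64500],   # learned via transit, originated by AS64500
    "0.0.0.0/0": [64496],               # default route via the transit provider
}


def build_table(rib):
    """Parse prefixes once and sort longest prefix first, so the first match wins."""
    table = [(ipaddress.ip_network(prefix), path) for prefix, path in rib.items()]
    table.sort(key=lambda entry: entry[0].prefixlen, reverse=True)
    return table


def origin_asn(table, ip):
    """Return the origin AS of the most specific route covering `ip`, or None."""
    addr = ipaddress.ip_address(ip)
    for network, as_path in table:
        if addr in network:
            return as_path[-1]  # the origin AS is the last hop of the AS path
    return None


if __name__ == "__main__":
    table = build_table(RIB)
    for src_ip in ("203.0.113.10", "203.0.113.200", "192.0.2.1"):
        print(src_ip, "-> AS", origin_asn(table, src_ip))
```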

Caches without BGP Sessions – Many caches are deployed as devices inside the operator’s network without a BGP session of their own. For these deployments, identification relies on interface naming conventions in SNMP interface descriptions: BENOCS can assign pseudo-AS numbers to interfaces whose descriptions follow an agreed naming pattern (such as “Akamai-Cache-01”). This allows operators to track cache traffic even when the caches do not participate in BGP routing.
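
A naming-convention rule of this kind can be sketched as a regular expression over interface descriptions (on most routers, the configured description is exposed through the IF-MIB `ifAlias` object), mapped to a pseudo-AS and applied to flows by their input interface. The pattern, the flow dictionary layout, and the pseudo-AS values below are hypothetical placeholders chosen for illustration; they are not BENOCS’ actual configuration.

```python
import re

# Hypothetical convention "<Provider>-Cache-<NN>", e.g. "Akamai-Cache-01".
CACHE_PATTERN = re.compile(r"^(?P<provider>[A-Za-z]+)-Cache-\d+$", re.IGNORECASE)

# Hypothetical pseudo-AS numbers agreed for each provider (not real assignments).
PSEUDO_AS = {"akamai": 4_200_000_001, "facebook": 4_200_000_002}


def pseudo_asn(if_description):
    """Return the pseudo-AS for a cache-facing interface description, else None."""
    match = CACHE_PATTERN.match(if_description.strip())
    if match is None:
        return None
    return PSEUDO_AS.get(match.group("provider").lower())


def tag_flows(flows, if_descriptions):
    """Set src_asn to the pseudo-AS when a flow enters through a cache interface.

    `if_descriptions` maps (router, ifIndex) -> description, e.g. as polled via SNMP.
    """
    tagged = []
    for flow in flows:
        desc = if_descriptions.get((flow["router"], flow["in_ifindex"]), "")
        asn = pseudo_asn(desc)
        tagged.append({**flow, "src_asn": asn if asn is not None else flow.get("src_asn")})
    return tagged
```

Flows arriving on interfaces that do not match the convention keep whatever ASN BGP already assigned, so the rule only fills the gap left by caches without BGP sessions.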

Private ASN Configuration – Some operators configure Private ASNs (AS64512-AS65534 range) within their own routing infrastructure to break out cache traffic, mobile gateways, or enterprise networks. These Private ASNs are learned automatically through BGP routing just like public ASNs, providing seamless identification without requiring pseudo-AS configuration.
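
Recognizing private ASNs is a simple range check. RFC 6996 reserves 64512–65534 (16-bit) and 4200000000–4294967294 (32-bit) for private use. The sketch below labels a route by its origin AS when that origin is private; the specific private ASNs and segment names in `SEGMENT_LABELS` are hypothetical examples of what an operator might configure.

```python
# Private-use ASN ranges reserved by RFC 6996 (range end is exclusive).
PRIVATE_ASN_RANGES = (
    range(64512, 65535),                  # 16-bit: 64512-65534
    range(4_200_000_000, 4_294_967_295),  # 32-bit: 4200000000-4294967294
)

# Hypothetical operator-defined names for its own private ASNs.
SEGMENT_LABELS = {65001: "cache breakout", 65002: "mobile gateway", 65003: "enterprise"}


def is_private_asn(asn: int) -> bool:
    return any(asn in r for r in PRIVATE_ASN_RANGES)


def segment_for(as_path):
    """Label a route by its origin AS if that origin is a private ASN, else None."""
    if not as_path:
        return None
    origin = as_path[-1]
    if is_private_asn(origin):
        return SEGMENT_LABELS.get(origin, f"private AS{origin}")
    return None
```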

Through these identification methods, BENOCS Analytics successfully revealed cache infrastructure from Facebook within Mobicom’s network. Using BENOCS Analytics’ six-dimensional Sankey visualization, Mobicom could finally see traffic flows from all content provider caches through their network infrastructure to customer segments across different regions and time periods.

Results and benefits

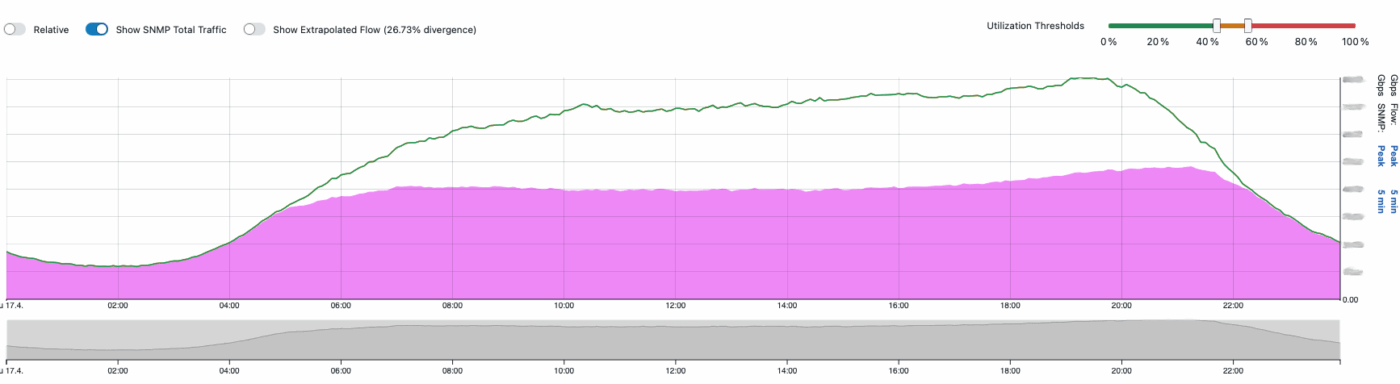

Once BENOCS Analytics was fully operational and cache visibility was enabled, comparing the three months before with the three months after revealed a striking result.

Measured network traffic volumes increased by 50% overall, and by 70% during peak hours.

This was not because subscribers suddenly consumed more data: the traffic had always been there but was invisible to the previous monitoring solution. All of it had been flowing through Mobicom’s network completely hidden from operational view.
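
It helps to translate the increase into the share of total traffic that had been hidden. If the whole jump came from newly visible traffic (as described above, not from real growth), a +50% increase means the hidden traffic was 0.5 / 1.5, about one third of the true total, and 0.7 / 1.7, about 41%, at peak. A minimal sketch of that arithmetic:

```python
def hidden_share(increase: float) -> float:
    """Share of true total traffic that was previously invisible.

    `increase` is the relative jump in measured volume once the hidden
    traffic became visible (0.5 for +50%). Assumes the whole jump is
    newly visible traffic rather than real subscriber growth.
    """
    return increase / (1.0 + increase)


overall = hidden_share(0.5)  # 0.5 / 1.5
peak = hidden_share(0.7)     # 0.7 / 1.7
print(f"hidden overall: {overall:.1%}, at peak: {peak:.1%}")
```

Run as written, this prints `hidden overall: 33.3%, at peak: 41.2%`.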

With complete visibility, Mobicom gained transformative insights:

- Cache efficiency visibility: Analysis revealed an impressive 1:3 ratio between transit and cache traffic; for every 100G of total traffic, 75G was served from local caches directly to subscribers. In other words, 75% of content delivery was handled locally, although some caches required cache-fill traffic of 25%, which lowers overall cache efficiency.

- Proactive capacity management: The team could identify when cache infrastructure was approaching capacity limits and coordinate with content providers to address utilization bottlenecks, heading off customer experience issues before they occurred.

- Data-driven operations: Capacity planning and infrastructure decisions could now be based on complete traffic volumes rather than partial data, and time to resolution for performance issues improved drastically.

- Content provider collaboration: Mobicom was able to have data-driven conversations with Facebook about cache performance, traffic localization and capacity planning. These discussions were now supported by precise traffic measurements and utilization metrics.
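
The cache-efficiency figures reduce to simple ratios. A 1:3 transit-to-cache ratio means 25G of transit and 75G of cache traffic per 100G, so 75% is served locally. How the 25% cache-fill figure is defined is not stated; the sketch below assumes, purely for illustration, that fill is a fraction of cache egress that has to be pulled over upstream links. Under that assumption, the share of delivered traffic that never crossed an upstream link drops to 56.25%.

```python
def cache_offload(transit_gbps: float, cache_gbps: float, fill_fraction: float = 0.0):
    """Return (local_share, net_offload) for a given traffic split.

    local_share: fraction of delivered traffic served from local caches.
    net_offload: fraction of delivered traffic that never crossed an upstream
    link, ASSUMING cache fill = fill_fraction * cache egress, fetched upstream.
    """
    total = transit_gbps + cache_gbps
    local_share = cache_gbps / total
    fill_gbps = cache_gbps * fill_fraction
    net_offload = (cache_gbps - fill_gbps) / total
    return local_share, net_offload


# 1:3 transit-to-cache ratio on 100G of total traffic.
print(cache_offload(25, 75))        # no cache fill
print(cache_offload(25, 75, 0.25))  # with the assumed 25% cache fill
```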

Recent improvements

Building on this success, Mobicom is working with BENOCS to implement the Application Identifier module, which analyzes DNS cache misses from their DNS resolvers to identify specific applications delivered across CDNs. This DNS-based correlation will enable Mobicom to see which CDNs deliver applications such as Disney+, TikTok, and Amazon Prime into their network, providing application-level intelligence that complements their existing network-level visibility.
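
The general idea of DNS-based correlation can be sketched as a join: resolver answers map server IPs to the hostnames subscribers asked for, hostnames map to applications, and flow records (already carrying the CDN’s ASN from BGP) are then summed per application and CDN. The hostname-to-application table, the record layouts, and the “last answer wins” rule are hypothetical simplifications, not the Application Identifier module’s actual logic; real services use many more hostnames, and one CDN IP often serves several applications.

```python
from collections import defaultdict

# Illustrative domain-suffix -> application table (not an authoritative list).
APP_SUFFIXES = {
    "disneyplus.com": "Disney+",
    "tiktok.com": "TikTok",
    "primevideo.com": "Amazon Prime",
}


def app_for(hostname):
    """Match the hostname or any parent domain against APP_SUFFIXES."""
    labels = hostname.lower().rstrip(".").split(".")
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in APP_SUFFIXES:
            return APP_SUFFIXES[suffix]
    return None


def bytes_by_app_and_cdn(dns_answers, flows):
    """dns_answers: (hostname, answer_ip) pairs; flows: dicts with src_ip, src_asn, bytes."""
    ip_to_app = {}
    for hostname, answer_ip in dns_answers:
        app = app_for(hostname)
        if app is not None:
            ip_to_app[answer_ip] = app  # simplification: last answer wins, TTLs ignored
    totals = defaultdict(int)
    for flow in flows:
        app = ip_to_app.get(flow["src_ip"])
        if app is not None:
            totals[(app, flow["src_asn"])] += flow["bytes"]
    return dict(totals)
```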

Conclusion

Mobicom’s journey from having a third of their network traffic invisible (hidden volume equal to 50% of what they could previously see) to achieving complete end-to-end visibility demonstrates a fundamental truth: you cannot effectively operate, troubleshoot, or optimize infrastructure you cannot see.

With BENOCS Analytics’ intelligent cache identification methods and comprehensive network intelligence, Mobicom now has complete visibility of their network, enabling proactive management, accurate capacity planning, and better service delivery.

For telecommunications operators facing similar gaps in network visibility, especially around content delivery infrastructure, Mobicom’s experience offers a clear lesson: security-focused monitoring tools excel at protecting network perimeters, but comprehensive network intelligence requires purpose-built solutions that reveal the complete picture of your network operations.