The Internet is a large and complex system that needs experts from diverse backgrounds to function. Common knowledge, right? We think the same logic should apply to network analytics tools.

When it comes to choosing the right analytics tool for your networks, you might be tempted by companies that claim to be a “jack-of-all-trades.” This may look like the best deal on several levels (installation, hardware, fees, etc.), but different types of networks and the different functions involved in running a network require different types of network analytics. Would you build a house with a Swiss Army Knife or with a tool box filled with high-quality tools?

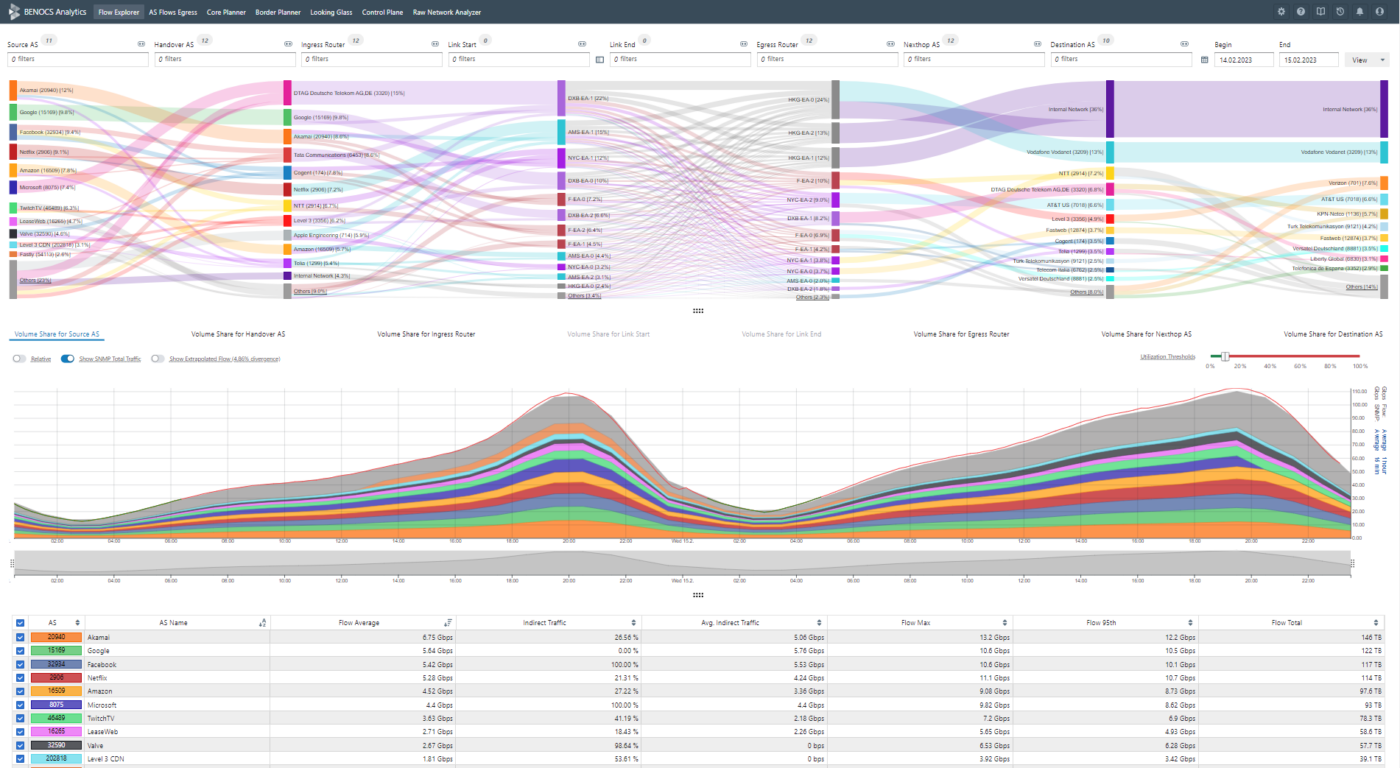

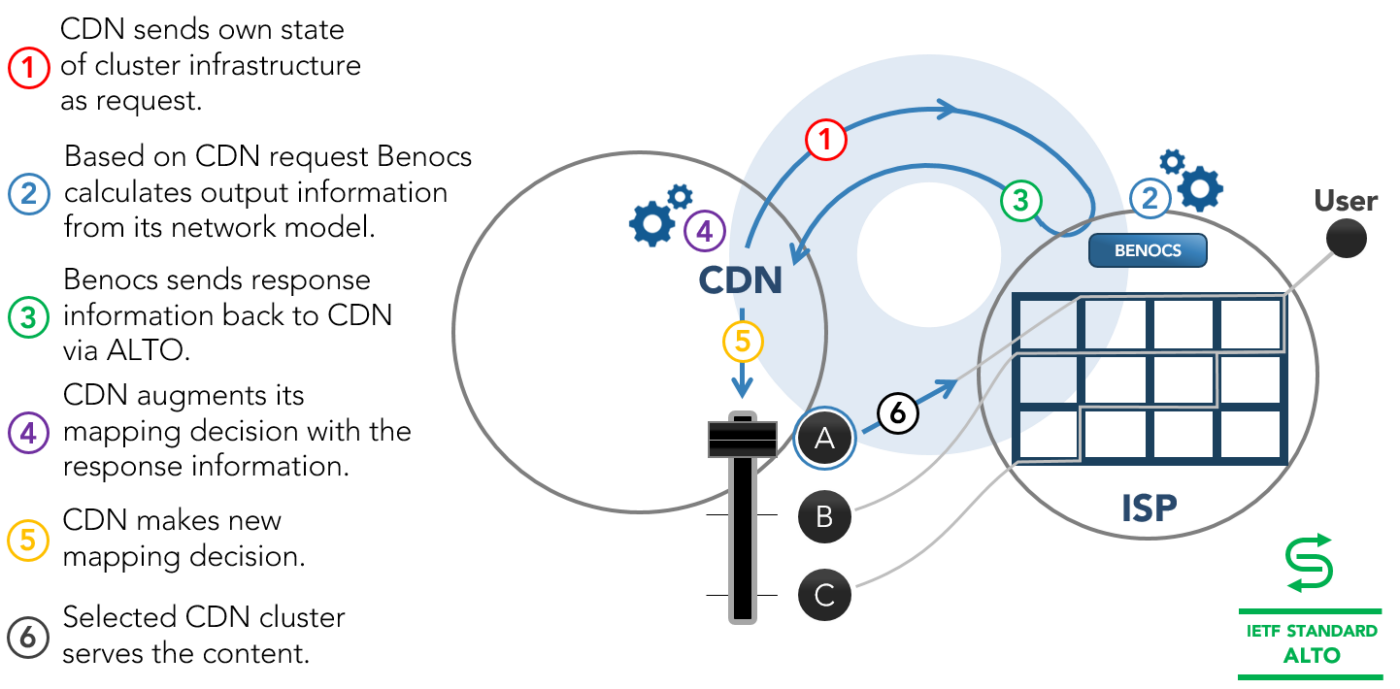

When different departments, such as network architecture and network security, need different information, the perfect solution can seem to be a single tool that serves both. What networks really need, however, is several specialized tools for the same price or less. BENOCS Analytics offers customers a specialized tool that focuses on displaying large network traffic flows for peering optimization, network operations, and maintenance.

If you're going to spend the time and money, spend it well

If you start researching network analytics tools for enterprises or service providers, network security, DDoS defense, and so on, you will find many of these products offered on the same website by the same company. Given the costs of installation, hardware, and operations, not to mention the time spent just researching products, buying them from one vendor often feels like the best deal. In the end, however, you may end up with a tool that only slightly improves your company’s performance.

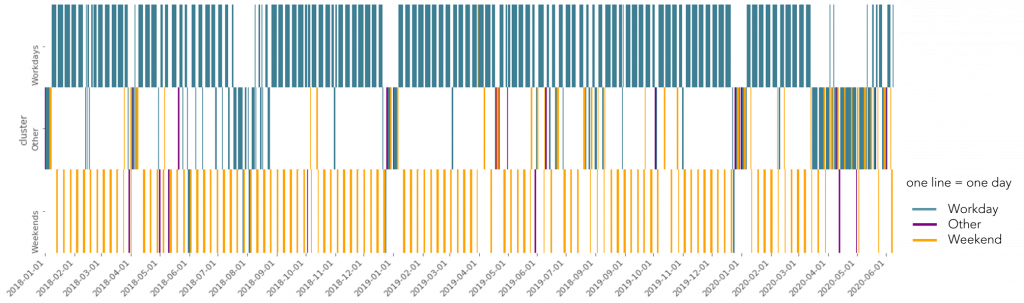

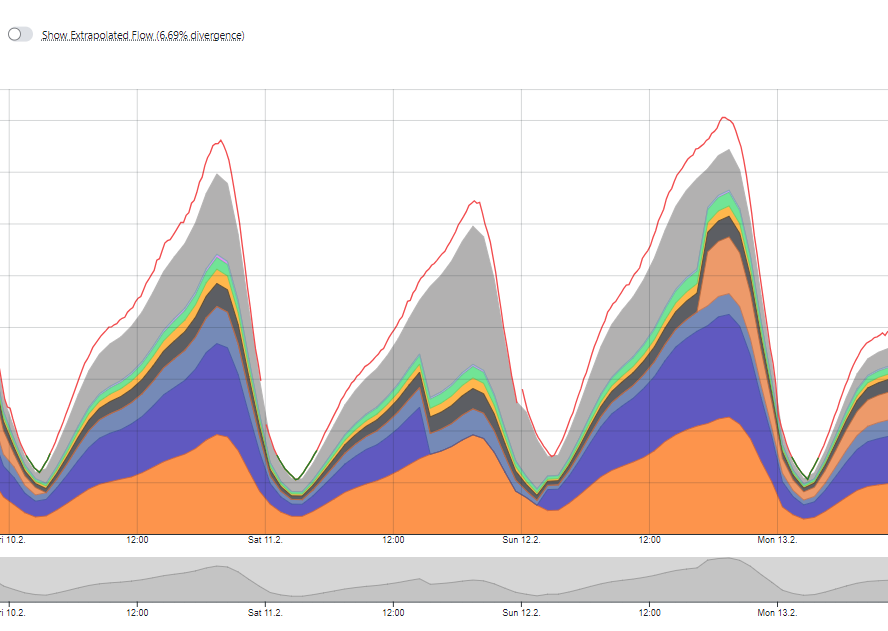

At BENOCS, we focus on service provider analytics. Our analytics collect a combination of NetFlow, BGP, IGP, DNS, and SNMP data from your network, aggregate it, and present it in the way that makes the most sense to network engineers, peering and transit managers, and quality assurance departments. Our data model keeps queries fast, history accessible, the overview holistic, and hardware affordable.

A company that focuses mainly on DDoS protection, on the other hand, extracts more refined data from the network and presents it in the way best suited to identifying and combating a DDoS attack the moment it hits. This is a completely different specialization from the one required for network optimization, and it would benefit only your network security department.

Remember that Swiss Army Knife we mentioned earlier? It is a great tool for putting together a temporary shelter in the woods on a weekend camping trip, but not for professional craftspeople building a sturdy house. Your employees are craftspeople looking to build and maintain a house. For the same price as buying each of them a Swiss Army Knife, you could invest in a tool box filled with specialized tools that fit their individual needs.

Start filling up your tool box

When searching for the right analytics tools to meet your company’s needs, buy the highest-quality tool from each company that specializes in it, whether that is DDoS protection, network security, enterprise network analytics, or anything else, and choose BENOCS Analytics for your service provider network analytics.

Our customers have done the same. Not only have they saved money on service fees, but they have also seen vast improvements in the performance of their employees, which has carried over to the performance of their networks. You know what they say: good network performance leads to happy customers.