Berlin, Germany – June 19, 2020 – BENOCS GmbH announced today that BENOCS Analytics is now officially running in the KPN network, giving KPN detailed visibility into its network traffic through an easy-to-use interface. With BENOCS Analytics installed, KPN has a high-performance tool that lets it monitor traffic, troubleshoot problems, seek new business opportunities and more.

Before installing BENOCS, KPN had to rely on data from several legacy tools, which made gathering the information it needed time-consuming and labor-intensive. KPN also wanted a quick, intuitive way to view traffic flows for forecasting, for optimizing peering relationships, and for guaranteeing effective, high-quality routing to end customers. “We are very glad to be providing KPN with the network visibility they need in order to optimize their network and QoE,” stated Stephan Schroeder, CEO of BENOCS.

According to KPN Network Specialist Joris de Mooij, the setup of BENOCS Analytics “went very quickly. The BENOCS Engineering team provided fast and responsive support and are very knowledgeable in their field. It is always a pleasure to work with such a team.”

Because BENOCS Analytics is installed on premises, KPN can meet its strict security requirements, which prohibit exporting any data outside its network.

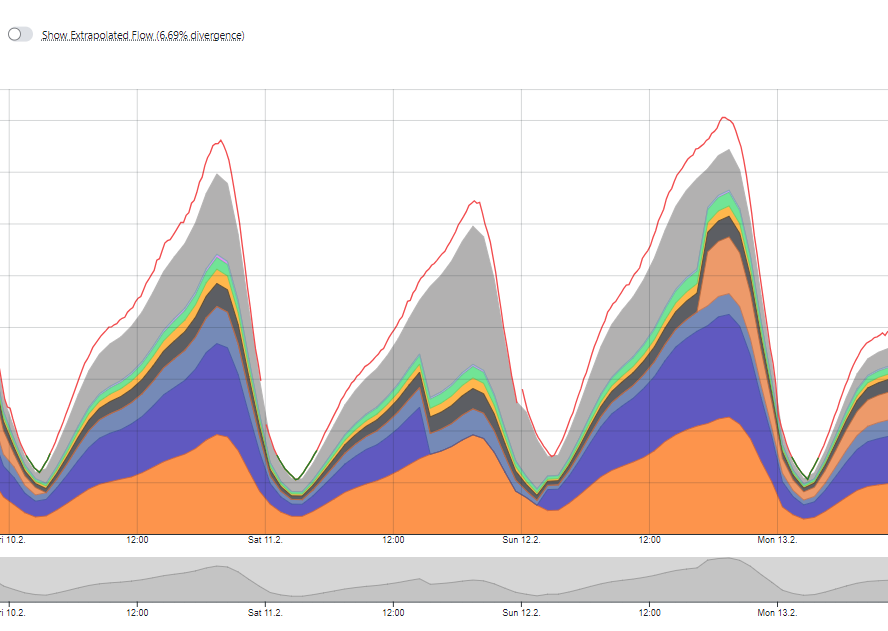

Furthermore, the installation could not have come at a better time for KPN. Just as BENOCS went live, internet traffic began to reach record levels as countries went into lockdown due to COVID-19. “We had to do some smart upgrades to maintain high quality routes for our end users. BENOCS was of crucial importance in this process. It also helps us with capacity forecasting,” said Rob de Ruig, Interconnection Manager at KPN.

About BENOCS

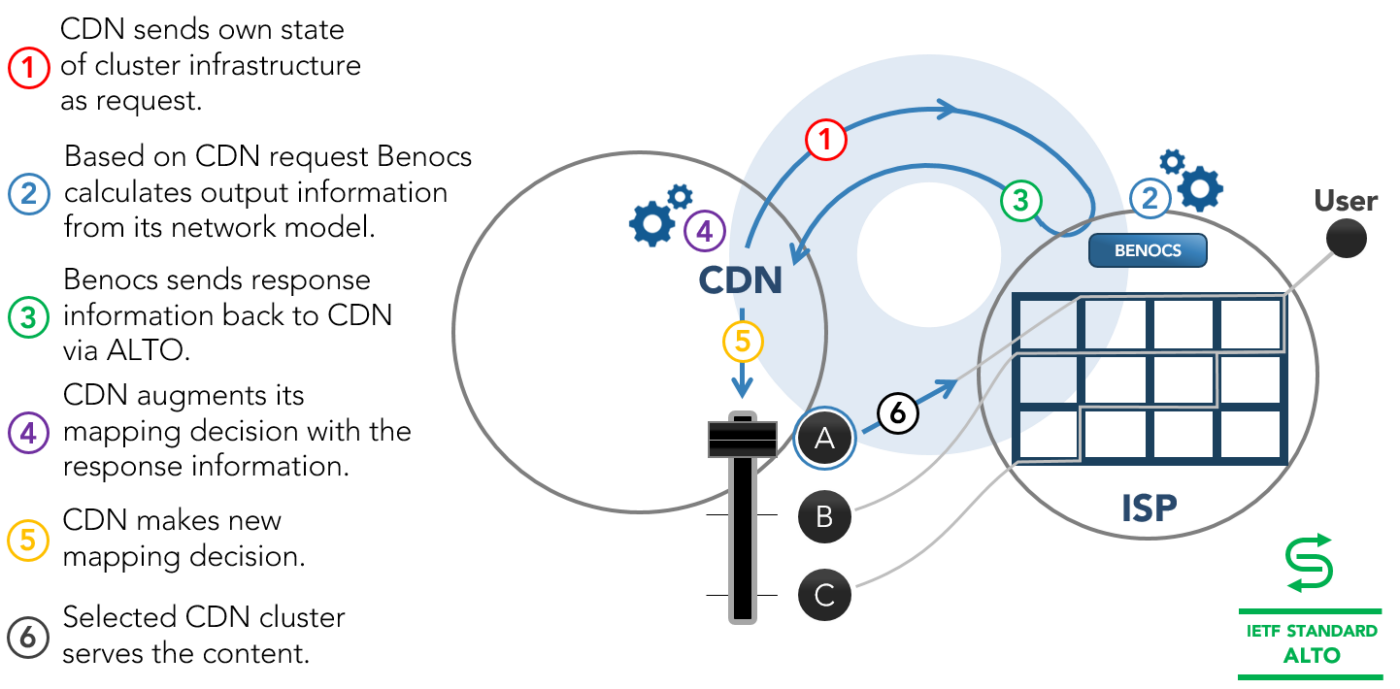

BENOCS GmbH, a spin-off of Deutsche Telekom, is a small company with big plans to revolutionize the way network traffic is managed. Its intelligent, fully automated solutions fit networks of any size and give ISPs and CDNs strategic ways of coping with growing network traffic. With BENOCS Analytics, network operators, transit and wholesale carriers, hosting providers and CDNs gain end-to-end visibility of their entire traffic flows.

About KPN

KPN is a leading telecommunications and IT provider and the market leader in the Netherlands. With fixed and mobile networks for telephony, data and television, it serves customers at home and abroad. KPN focuses on both private customers and business users, from small to large. In addition, it offers telecom providers access to its widespread networks.